Image processing 1,000 times faster is goal of new $5M contract

Lu plans to design and fabricate a computer chip based on so-called self-organizing, adaptive neural networks.


ANN ARBOR – Loosely inspired by a biological brain’s approach to making sense of visual information, a University of Michigan researcher is leading a project to build alternative computer hardware that could process images and video 1,000 times faster with 10,000 times less power than today’s systems—all without sacrificing accuracy.

“With the proliferation of sensors, videos and images in today’s world, we increasingly run into the problem of having much more data than we can process in a timely fashion,” said Wei Lu, U-M associate professor of electrical engineering and computer science. “Our approach aims to change that.”

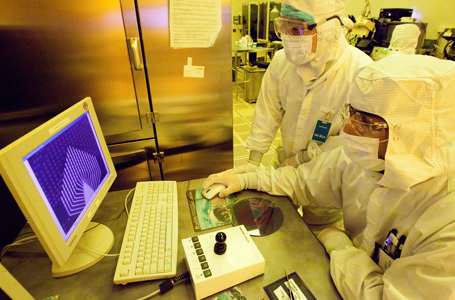

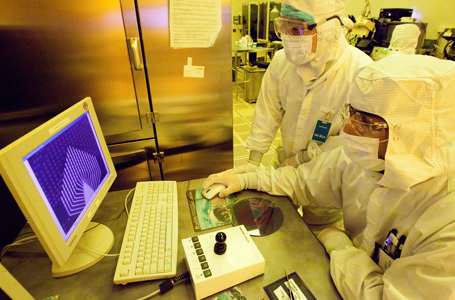

Lu has been awarded an up-to-$5.7 million contract from the Defense Advanced Research Projects Agency to design and fabricate a computer chip based on so-called self-organizing, adaptive neural networks. So far, Lu has received $1.3 million to begin work on the project.

The networks will be made of conventional transistors and innovative components called memristors that perform both logic and memory functions. Memristors are resistors with memory—electronic devices that regulate electric current based on the history of the stimuli applied to them.
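To make "resistors with memory" concrete, here is a minimal toy sketch. It assumes a simple linear-drift model in which current nudges an internal state and that state sets the resistance. The class name `ToyMemristor` and every parameter value are hypothetical and chosen for illustration; this is not a description of the devices Lu's group is building.

```python
class ToyMemristor:
    """Toy linear-drift memristor. All parameter values are illustrative."""

    def __init__(self, r_on=100.0, r_off=16000.0, state=0.1, mobility=1e4):
        self.r_on = r_on          # resistance when fully "on" (ohms)
        self.r_off = r_off        # resistance when fully "off" (ohms)
        self.state = state        # internal state in [0, 1]; this is the memory
        self.mobility = mobility  # assumed rate at which current moves the state

    def resistance(self):
        # Resistance is a blend of the two extremes, weighted by the state.
        return self.r_on * self.state + self.r_off * (1.0 - self.state)

    def apply_voltage(self, volts, dt=1e-3):
        # The current that flows depends on the present resistance...
        current = volts / self.resistance()
        # ...and that current shifts the state, so history is recorded.
        self.state += self.mobility * current * dt
        self.state = min(1.0, max(0.0, self.state))
        return current


device = ToyMemristor()
before = device.resistance()
for _ in range(100):          # a train of positive pulses
    device.apply_voltage(1.0)
after = device.resistance()   # lower than `before`, and it stays that way
```

In this sketch the resistance drops after the positive pulses and holds its new value when no voltage is applied, which is the sense in which the device remembers past stimuli.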

Because memristors can handle both logic and memory, researchers say, they could enable new computing platforms that process a vast number of signals in parallel and are capable of advanced machine learning. Systems built with them could be much more efficient than conventional computers at handling “big data” tasks such as analyzing images and video.

The new network will be designed to use a big-picture approach to image processing. Rather than painstakingly rendering pixel by pixel, as today’s computers do, the system would look at a whole image at once and identify deep structures in it through a process called inference.

“The idea is to recognize that most data in images or videos are essentially noise,” Lu said. “Instead of processing all of it or transmitting it fully and wasting precious bandwidth, adaptive neural networks can extract key features and reconstruct the images with a much smaller amount of data.”
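The idea Lu describes can be illustrated with a small sketch, assuming a fixed set of four hand-written features rather than features learned by a network. A four-sample "image" is projected onto the features, only the two strongest coefficients are kept, and the signal is rebuilt from those alone. The feature set, the sample values and the function names are all hypothetical.

```python
import math

S = 1 / math.sqrt(2)
# Four orthonormal Haar-style features for a 4-sample signal (illustrative).
FEATURES = [
    [0.5, 0.5, 0.5, 0.5],    # overall brightness
    [0.5, 0.5, -0.5, -0.5],  # left half vs. right half
    [S, -S, 0.0, 0.0],       # fine detail, left pair
    [0.0, 0.0, S, -S],       # fine detail, right pair
]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def encode(signal, keep):
    """Return the `keep` largest-magnitude (feature index, coefficient) pairs."""
    coeffs = [(i, dot(signal, f)) for i, f in enumerate(FEATURES)]
    coeffs.sort(key=lambda pair: abs(pair[1]), reverse=True)
    return coeffs[:keep]


def decode(code):
    """Rebuild an approximate signal from the kept coefficients."""
    out = [0.0] * len(FEATURES[0])
    for i, c in code:
        for j, value in enumerate(FEATURES[i]):
            out[j] += c * value
    return out


signal = [4.0, 4.1, 1.0, 0.9]
code = encode(signal, keep=2)   # half as many numbers as the raw signal
approx = decode(code)           # close to the original; fine detail is dropped
```

Here the two discarded coefficients carry only the small sample-to-sample wiggle, so the reconstruction stays close to the original while using half as many numbers.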

Lu’s ultimate goal in this project is to build a network that uses the memristors as, essentially, artificial synapses between conventional circuits, which could be considered artificial neurons. The synapses in a biological brain are the gaps between neurons across which neurons send chemical or electrical signals.

In a simpler, alternative version of the new network, Lu plans to build a system that uses memristors as memory nodes along traditional wired connections between circuits (rather than using the memristors as the connections themselves) to improve the efficiency of the machine’s learning process.

After the systems are built, the researchers will train them to recognize common image features. They’ll load them with thousands of images—cars in a busy street, aerial images and handwritten characters, for example.

During training, the connections from the input images to the artificial neurons will evolve spontaneously, Lu said, and afterwards each neuron should be able to identify one particular feature or shape. Then, when presented with a similar feature in a fresh image, only the neurons that find their assigned pattern or shape would fire and transmit information.
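One common way to get this kind of behavior in software is winner-take-all competitive learning, sketched below. It is offered as an analogy under stated assumptions, not as the learning rule the project will use: the two toy patterns, the starting weights, the learning rate and the firing threshold are all hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def train(weights, patterns, rate=0.5, epochs=10):
    """Winner-take-all: only the best-matching neuron updates its weights."""
    for _ in range(epochs):
        for x in patterns:
            responses = [dot(w, x) for w in weights]
            winner = responses.index(max(responses))
            # Move the winner's weights part of the way toward the input.
            weights[winner] = [wi + rate * (xi - wi)
                               for wi, xi in zip(weights[winner], x)]
    return weights


def firing_neurons(weights, x, threshold=1.0):
    """Indices of neurons whose response to x exceeds the threshold."""
    return [i for i, w in enumerate(weights) if dot(w, x) > threshold]


# Two toy "features": energy on the left half or on the right half.
patterns = [[1, 1, 0, 0], [0, 0, 1, 1]]
# Slightly different starting weights so each neuron can specialize.
weights = [[0.6, 0.5, 0.4, 0.5], [0.4, 0.5, 0.6, 0.5]]
train(weights, patterns)

fresh = [0.9, 1.0, 0.1, 0.0]            # unseen input resembling the first pattern
fired = firing_neurons(weights, fresh)  # only the matching neuron responds
```

No labels are supplied during training. Each neuron drifts toward whichever pattern it already matched slightly better, and afterwards only that neuron crosses the threshold when a similar input appears.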

The project is titled Sparse Adaptive Local Learning for Sensing and Analytics. Other collaborators are Zhengya Zhang and Michael Flynn of the U-M Department of Electrical Engineering and Computer Science, Garrett Kenyon of Los Alamos National Laboratory and Christof Teuscher of Portland State University.
