CSE Seminar

Towards 4D Reconstruction in the Wild

This event is free and open to the public.

Zoom link for remote attendees (password: 123123)

Abstract: With advances in VR/AR hardware, we are entering an era of virtual presence. To populate the virtual world, one solution is to build digital copies of the real world in 4D (3D plus time). However, existing methods for 4D reconstruction often require specialized sensors or body templates, making them less applicable to the diverse objects and scenes one may see in everyday life. In light of this, our goal is to reconstruct 4D structures from videos in the wild. Although the problem is challenging due to its under-constrained nature, recent advances in differentiable graphics and data-driven vision priors allow us to approach it in an analysis-by-synthesis framework. Guided by generic vision, motion, and physics priors, our method searches via gradient-based optimization for 4D structures that are faithful to the video inputs. Based on this framework, we present methods to reconstruct deformable objects and their surrounding scenes in 4D from in-the-wild video footage; the resulting reconstructions can be transferred to VR and robot platforms.

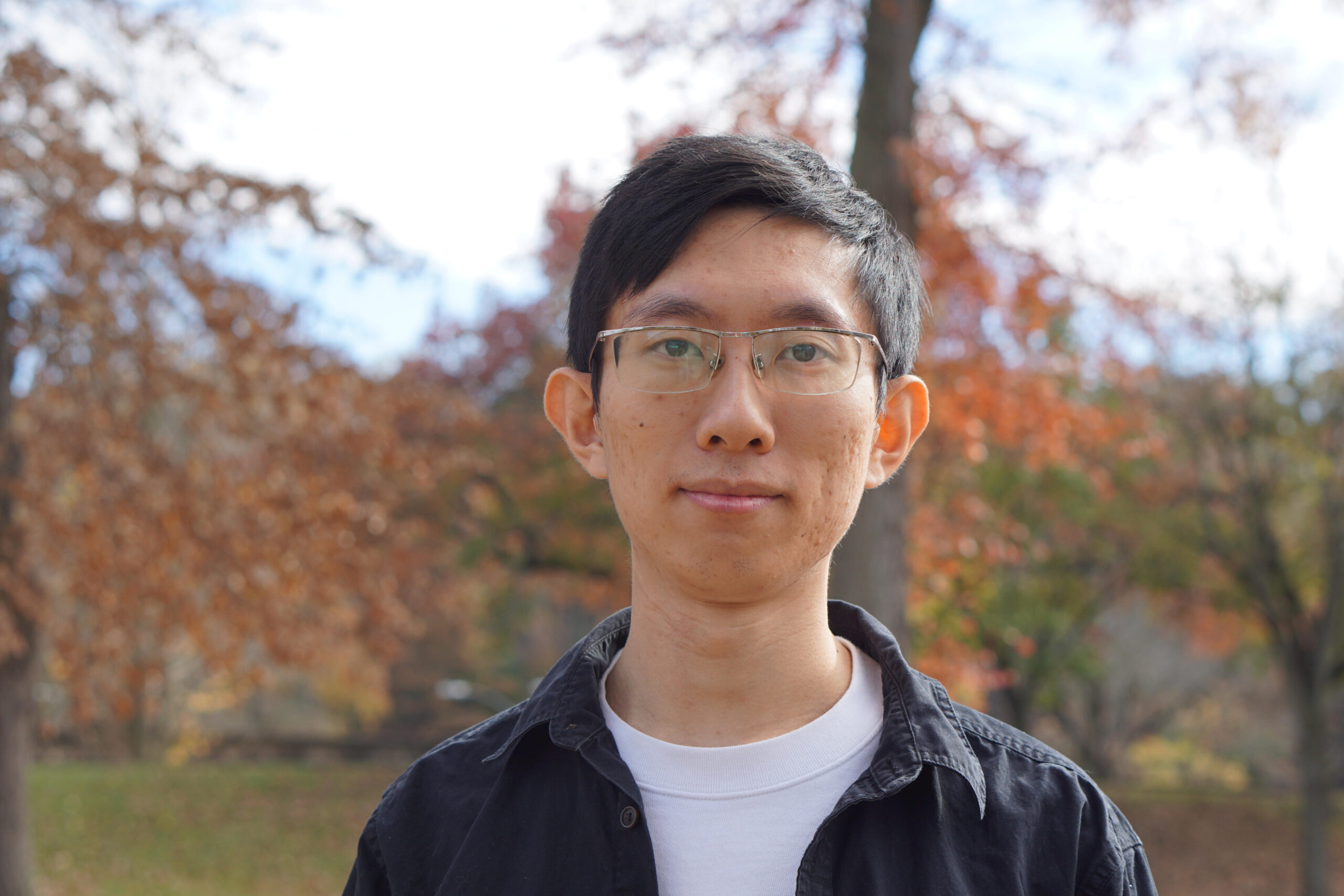

Bio: Gengshan Yang is a research scientist at Meta’s Reality Labs in Pittsburgh. He received his PhD in Robotics from Carnegie Mellon University, advised by Prof. Deva Ramanan. He is also a recipient of the 2021 Qualcomm Innovation Fellowship. His research is focused on 3D computer vision, particularly on inferring structures (e.g., 3D, motion, segmentation, physics) from videos.
